Believe it or not, I have not been able to find answers to two basic questions about YouTube live-streaming. They’re issues I’ve been noticing for the last four weeks on The Silent Comedy Watch Party, and I can’t believe that at least one of them hasn’t been picked up on by someone who then suggested a hack for it.

The one I probably can’t do a darn thing about is the faint purple-green bands that appear from time to time (usually in a darker scene or on a title card), slowly rising from the bottom to the top of the frame. I’m sure this has something to do with the frame or scan rate of the video projector and the frame rate “chosen” by the iPhone’s iOS for what it deems the best image. Point your smartphone, in video mode, at a computer screen or TV and you’ll probably see this.

I haven’t found a way to override this.

I also haven’t found a way to override the fact that when an iPhone is being used to live-stream something, the ability to override the auto-exposure correction can’t be overridden. Let me rephrase. Usually you can hold your finger on the screen to lock in an exposure and focus setting. When you’re live-streaming, this function isn’t available.

This is why, sometimes on The Silent Comedy Watch Party, an actor’s face appears washed out while a film is running. If there’s a Reddit thread or YouTube help page with a workaround for this, I haven’t found it.

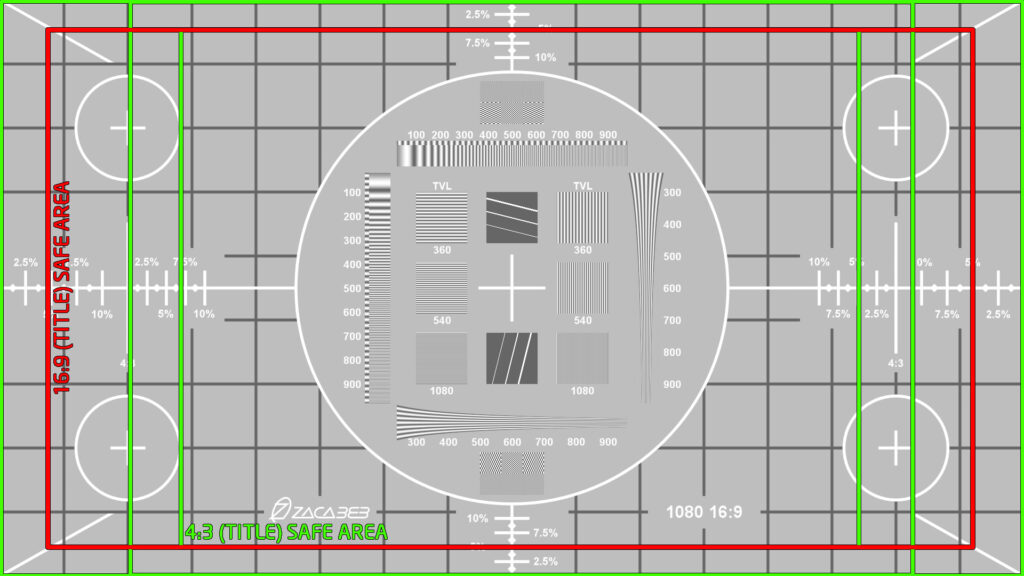

If you’ve done any work in TV production or broadcasting, you’re aware of something called “TV safe” or something like that (the formal terms are “title safe” and “action safe” areas). On regular TV, the image that’s sent out actually appears on your set slightly cropped. So when you’re creating graphics or composing shots, you deliberately allow for a little slop around the edges so nothing gets cut off when people see it at home.

I hadn’t really been paying attention to this, but the same thing seems to happen automatically with a YouTube live video. We set up our shot and camera placement so that the video projected on the wall above my piano fills the iPhone’s screen in “video” mode. Why in the world would your smartphone suddenly start taking video or a photo in a wider shot than what you were actually looking at?

And yet, that seems to be what happens.

(If your eyes have rolled back in your head at this point, that’s okay. You don’t have to finish reading this. I’m writing this up and posting it in case anyone on the planet is searching for this issue and can now feel like, “Okay, I’m not crazy… it’s not just me.”)

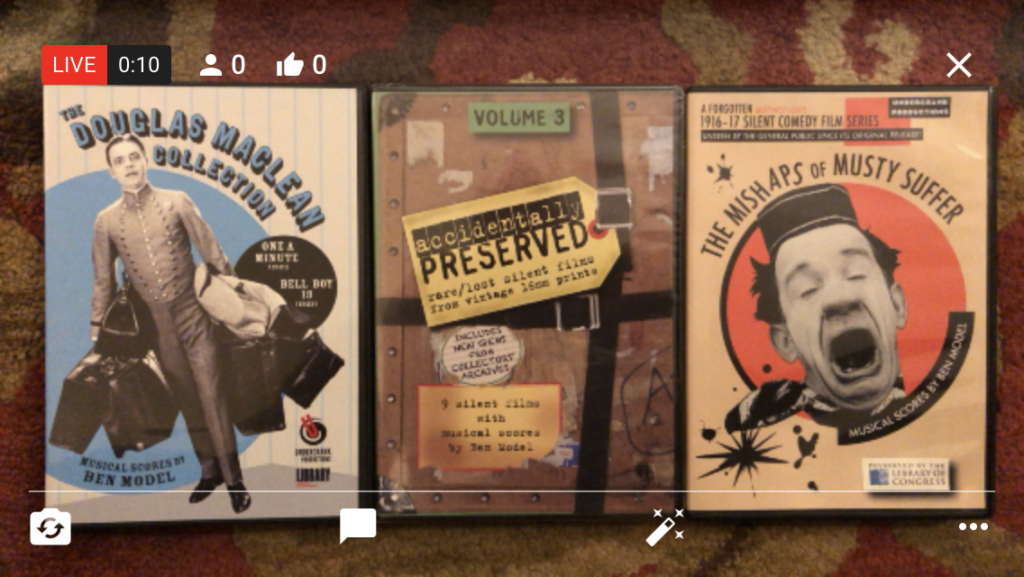

As a demo, here is what I see in my phone’s Camera app on the “video” setting:

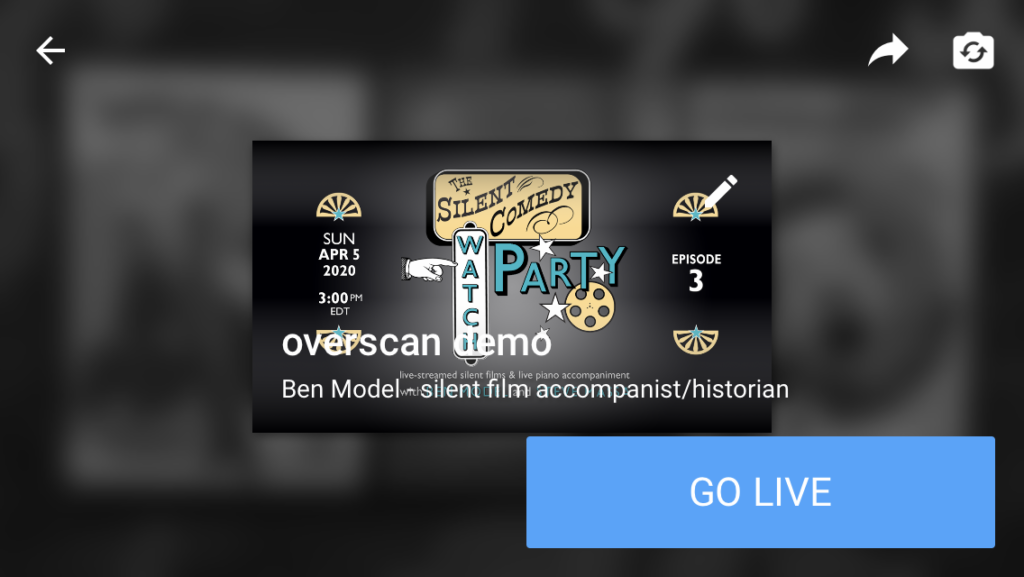

Here is what the very same camera shot, at the same distance from camera to objects, looks like as a live-streamed YouTube video:

What threw me off every time is that the preview screen YouTube shows you before you GO LIVE is blurry. I was paying attention to the big blue icon and hadn’t realized that the B&W, darkened, heavily blurred image behind it all was the same shot everyone was going to see online. You’ll notice that the stream’s thumbnail image in the middle is not shown with any extra border.

Clearly, as long as we’re going to continue showing the wall-projected image on The Silent Comedy Watch Party, I need to set the framing based on this B&W-dark-blurry preview image to allow for the “TV safe” area that YouTube adds. I’m sure this function makes complete sense to YouTube’s developers. I just wish I knew the reason for it.

If you are doing or planning to do a livestream, I hope this helps. Thanks.